With the General Availability announcement of AKS Automatic, I thought it would be a good time to get some hands-on experience with the new service by deploying an AKS Automatic instance. In this series of posts, I’ll be walking through the deployment of a private AKS Automatic cluster into a customer-managed virtual network.

This post focuses on the prerequisites required to deploy an AKS Automatic cluster and will address:

- Why a Private AKS Cluster

- Why a Customer-Managed Virtual Network Instead of a Microsoft-Managed Virtual Network?

- Before Jumping to Lightspeed (aka Prerequisites)

  - Review vCPU quota

  - Register Microsoft Container Service resource provider

  - Create a dedicated resource group to host the AKS cluster resources

  - Create a User Assigned Managed Identity for the AKS cluster

  - Assign the required Azure roles to the User Assigned Managed Identity

  - Delegate the API Server subnet to support the AKS control plane

Why a Private AKS Cluster

In my experience working with enterprise customers, it is uncommon to see a production Azure Kubernetes Service (AKS) cluster with its Kubernetes API server exposed to the public internet. In practice, production clusters are almost always deployed as private clusters to align with enterprise security, compliance, and networking requirements:

- Elimination of Public Control Plane Exposure

  The Kubernetes API server is accessible only via a private endpoint, significantly reducing the external attack surface.

- Stronger Security and Zero-Trust Alignment

  Private clusters enforce network isolation by default and integrate cleanly with zero-trust architectures, centralized firewalls, and network inspection controls.

- Compliance and Regulatory Requirements

  Many regulated industries require that management endpoints are not publicly accessible, making private clusters a necessity for compliance and audit readiness.

- Predictable Network Egress and Traffic Inspection

  All ingress and egress traffic can be routed through approved security appliances, enabling consistent inspection, logging, and policy enforcement.

Why a Customer-Managed Virtual Network Instead of a Microsoft-Managed Virtual Network?

Unless an AKS cluster has no requirement to access resources within a customer’s network, deploying the cluster into a customer-managed virtual network is typically the preferred approach.

A customer-managed virtual network provides the simplest and most secure method for enabling connectivity to dependent services, including databases, private endpoints, on-premises systems, and shared platform services. It allows customers to retain full control over network design, address space, routing, security boundaries, and traffic inspection.

By contrast, Microsoft-managed virtual networks are best suited to isolated scenarios where no inbound or outbound connectivity to customer-owned resources is required.

A Long Time Ago (aka Today’s Setup)

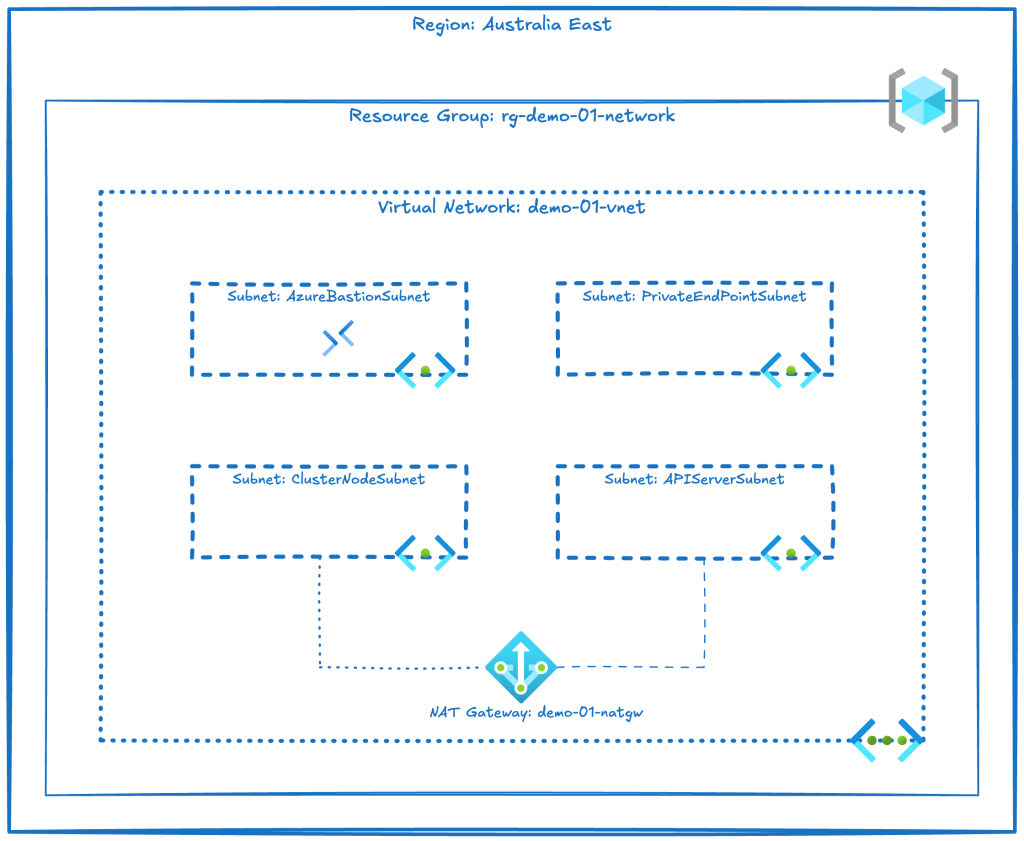

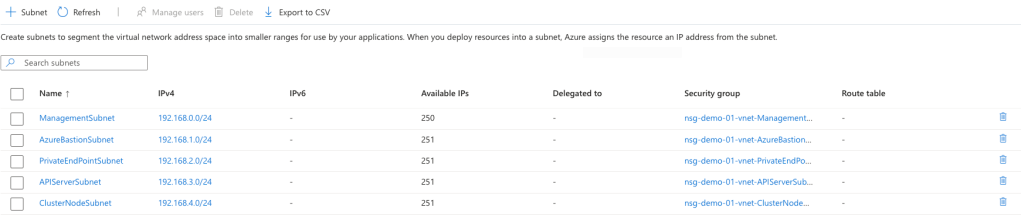

The following resources have already been deployed within my environment:

- A resource group (rg-demo-01-network) that contains networking resources

- A virtual network (demo-01-vnet) containing:

  - Azure Bastion subnet (AzureBastionSubnet)

  - Private Endpoint subnet (PrivateEndPointSubnet)

  - Cluster node subnet (ClusterNodeSubnet)

  - API server subnet (APIServerSubnet)

- Azure NAT Gateway (demo-01-natgw)

- Azure Bastion service (bst-demo-01)

Before Jumping to Lightspeed (aka Prerequisites)

Before commencing the deployment of AKS Automatic, the following prerequisites must be completed:

- Review vCPU quota

- Register Microsoft Container Service resource provider

- Create a dedicated resource group to host the AKS cluster resources

- Create a User Assigned Managed Identity for the AKS cluster

- Assign the required Azure roles to the User Assigned Managed Identity

- Delegate the API Server subnet to support the AKS control plane

Review vCPU quota

Review and confirm that the target subscription has sufficient vCPU quota available to support the initial cluster deployment. If the subscription does not have sufficient quota, the deployment will fail with an error similar to the one shown below.
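To check quota ahead of time, you can compare the region's current vCPU usage against its limit. The following is a minimal sketch, assuming the Total Regional vCPUs counter is exposed by `az vm list-usage` under the name `cores`. The helper names and the required vCPU figure in the usage comment are hypothetical, so substitute the number your initial node pool actually needs.

```shell
# Hypothetical helper: succeeds when (limit - current) covers the vCPUs required.
# $1 = current usage, $2 = quota limit, $3 = vCPUs required
has_vcpu_headroom() {
  current=$1; limit=$2; required=$3
  [ $((limit - current)) -ge "$required" ]
}

# Prints "current<TAB>limit" for the regional vCPU counter (assumed name: cores).
# $1 = Azure region, e.g. australiaeast
regional_vcpu_usage() {
  az vm list-usage --location "$1" \
    --query "[?name.value=='cores'].[currentValue, limit]" \
    --output tsv
}

# Usage in bash (requires a logged-in Azure CLI; 16 is a placeholder value):
#   read -r CURRENT LIMIT <<< "$(regional_vcpu_usage australiaeast)"
#   has_vcpu_headroom "$CURRENT" "$LIMIT" 16 && echo "quota OK"
```

Individual VM families have their own vCPU quotas as well, so the same comparison may need repeating for the family your nodes will use.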

Register Microsoft Container Service resource provider
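Provider registration is asynchronous, so it can help to check the provider's state before registering it and again afterwards. The following is a minimal sketch: `provider_state` and `wait_for_provider` are hypothetical helper names, and the default attempt count and delay are arbitrary choices.

```shell
# Prints the provider's registration state, e.g. Registered or NotRegistered.
# $1 = resource provider namespace
provider_state() {
  az provider show --namespace "$1" --query registrationState --output tsv
}

# Hypothetical helper: polls until the provider reports Registered.
# $1 = namespace, $2 = max attempts (default 30), $3 = seconds between polls (default 10)
wait_for_provider() {
  attempts=${2:-30}; delay=${3:-10}; i=0
  while [ "$i" -lt "$attempts" ]; do
    [ "$(provider_state "$1")" = "Registered" ] && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage (requires a logged-in Azure CLI):
#   provider_state Microsoft.ContainerService
#   wait_for_provider Microsoft.ContainerService && echo "provider ready"
```

If the state is already `Registered`, the registration step can be skipped.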

To register the Microsoft Container Service resource provider use the following Azure CLI command:

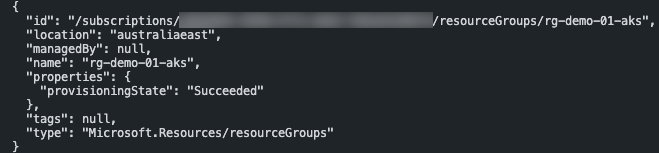

az provider register --namespace Microsoft.ContainerService

Create a dedicated resource group to host the AKS cluster resources

To create a dedicated resource group for the AKS cluster resources, use the following Azure CLI command:

RG_NAME='rg-demo-01-aks'

LOCATION='Australia East'

az group create -n $RG_NAME -l "$LOCATION"

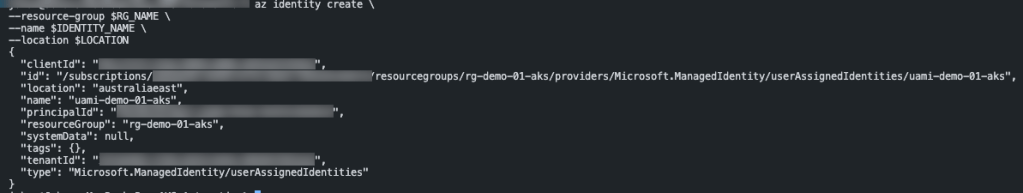

Create a User Assigned Managed Identity for the AKS cluster

To create a User Assigned Managed Identity (UAMI) that will be used to deploy and manage the AKS cluster resources, use the following Azure CLI command:

RG_NAME='rg-demo-01-aks'

LOCATION='Australia East'

IDENTITY_NAME='uami-demo-01-aks'

az identity create \

--resource-group $RG_NAME \

--name $IDENTITY_NAME \

--location "$LOCATION"

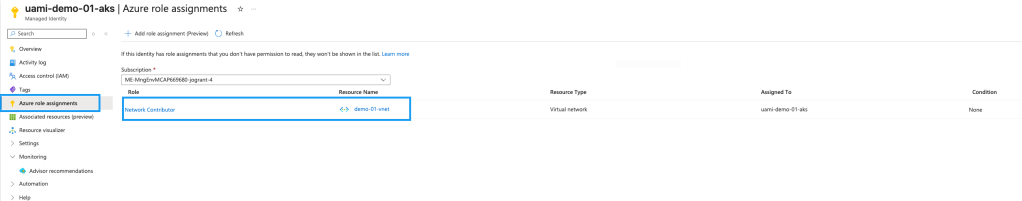

Assign the required Azure roles to the User Assigned Managed Identity

The UAMI cluster identity requires the following roles to be assigned to it:

- Network Contributor built-in role assignment on the API server subnet

- Network Contributor built-in role assignment on the virtual network to support Node Autoprovisioning

Use the following Azure CLI commands to assign the Network Contributor role to the cluster User Assigned Managed Identity (UAMI):

RG_NAME='rg-demo-01-aks'

RG_NETWORK_NAME='rg-demo-01-network'

LOCATION='Australia East'

IDENTITY_NAME='uami-demo-01-aks'

VNET_NAME='demo-01-vnet'

IDENTITY_PRINCIPAL_ID=$(az identity show \

--resource-group $RG_NAME \

--name $IDENTITY_NAME \

--query principalId \

-o tsv)

NETWORK_ID=$(az network vnet show \

--resource-group $RG_NETWORK_NAME \

--name $VNET_NAME \

--query id \

--output tsv)

az role assignment create \

--scope $NETWORK_ID \

--role "Network Contributor" \

--assignee $IDENTITY_PRINCIPAL_ID

If you browse to the managed identity in the Azure Portal and click on Azure Role Assignments you can see the role and scope.

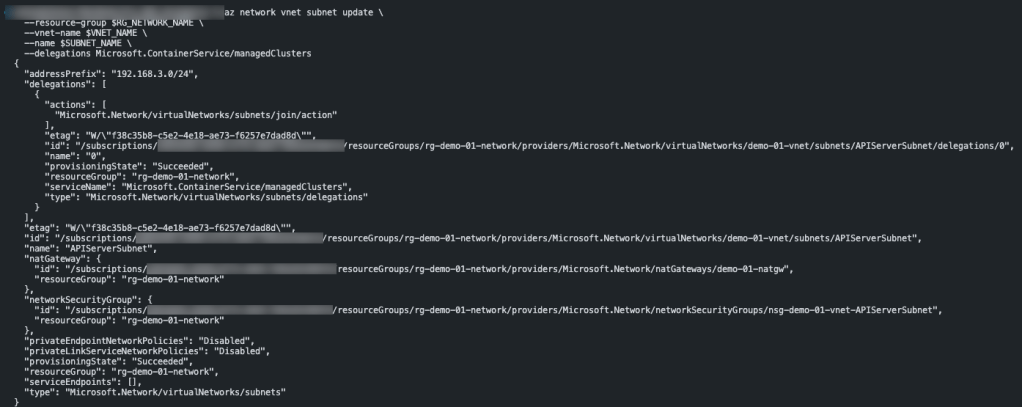
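The commands above grant the role at virtual network scope, which child subnets inherit. If you would rather scope an assignment to the API server subnet alone, or confirm from the CLI what has been assigned, the subnet's resource ID can be derived from the virtual network ID. A minimal sketch, where `subnet_scope` and `roles_at_scope` are hypothetical helper names:

```shell
# Hypothetical helper: builds a subnet resource ID from its parent VNet ID.
# $1 = virtual network resource ID, $2 = subnet name
subnet_scope() {
  printf '%s/subnets/%s\n' "$1" "$2"
}

# Lists the role names assigned to a principal at the given scope.
# $1 = principal (object) ID, $2 = scope resource ID
roles_at_scope() {
  az role assignment list --assignee "$1" --scope "$2" \
    --query "[].roleDefinitionName" --output tsv
}

# Usage (requires a logged-in Azure CLI and the variables set earlier):
#   roles_at_scope "$IDENTITY_PRINCIPAL_ID" "$NETWORK_ID"
#   SUBNET_ID=$(subnet_scope "$NETWORK_ID" APIServerSubnet)
```

The resulting `SUBNET_ID` can be passed to `az role assignment create --scope` in place of `$NETWORK_ID`.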

Delegate the API Server subnet to support the AKS control plane

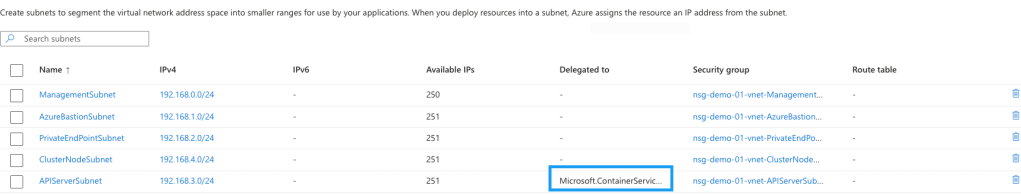

The dedicated API Server subnet must be delegated to Microsoft.ContainerService/managedClusters. As shown in the image below, the APIServerSubnet is currently not delegated.

To delegate the subnet, use the following command:

RG_NETWORK_NAME='rg-demo-01-network'

VNET_NAME='demo-01-vnet'

SUBNET_NAME='APIServerSubnet'

az network vnet subnet update \

--resource-group $RG_NETWORK_NAME \

--vnet-name $VNET_NAME \

--name $SUBNET_NAME \

--delegations Microsoft.ContainerService/managedClusters

After refreshing the subnet page, you can see that the APIServerSubnet has now been successfully delegated.
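The delegation can also be confirmed from the CLI rather than the portal. The following is a minimal sketch, assuming the subnet's `delegations` entries expose a `serviceName` property; `subnet_delegations` and `has_delegation` are hypothetical helper names.

```shell
# Prints the service names the subnet is delegated to, one per line.
# $1 = resource group, $2 = virtual network name, $3 = subnet name
subnet_delegations() {
  az network vnet subnet show \
    --resource-group "$1" --vnet-name "$2" --name "$3" \
    --query "delegations[].serviceName" --output tsv
}

# Hypothetical helper: succeeds when a line on stdin matches the expected
# service name exactly (case-insensitive).
has_delegation() {
  grep -qixF "$1"
}

# Usage (requires a logged-in Azure CLI):
#   subnet_delegations rg-demo-01-network demo-01-vnet APIServerSubnet \
#     | has_delegation Microsoft.ContainerService/managedClusters \
#     && echo "subnet delegated"
```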

Now that the prerequisites have been completed, we can move on to deploying the cluster.
